In this study, we reveal, for the first time, that popular online social networks (OSNs), especially Twitter, are being extensively abused by miscreants to promote illicit goods and services of diverse categories. This study is made possible by multiple machine learning tools designed to detect and analyze Posts of Illicit Promotion (PIPs) and to reveal their underlying promotion campaigns. In particular, we observe that PIPs are prevalent on Twitter and are also highly visible on three other popular OSNs: YouTube, Facebook, and TikTok. For instance, applying our PIP hunter to Twitter for 6 months led to the discovery of 12 million distinct PIPs, which are widely distributed across 5 major natural languages and 10 illicit categories, e.g., drugs, data leakage, gambling, and weapon sales. Alongside these PIPs, we discovered 580K Twitter accounts publishing PIPs as well as 37K distinct instant messaging accounts that are embedded in PIPs and serve as next hops of communication with prospective customers. An arms race between Twitter and illicit promotion operators is also observed. Notably, 90% of PIPs survive the first two months after being published on Twitter, which is likely due to the diverse evasion tactics that miscreants adopt to disguise PIPs.

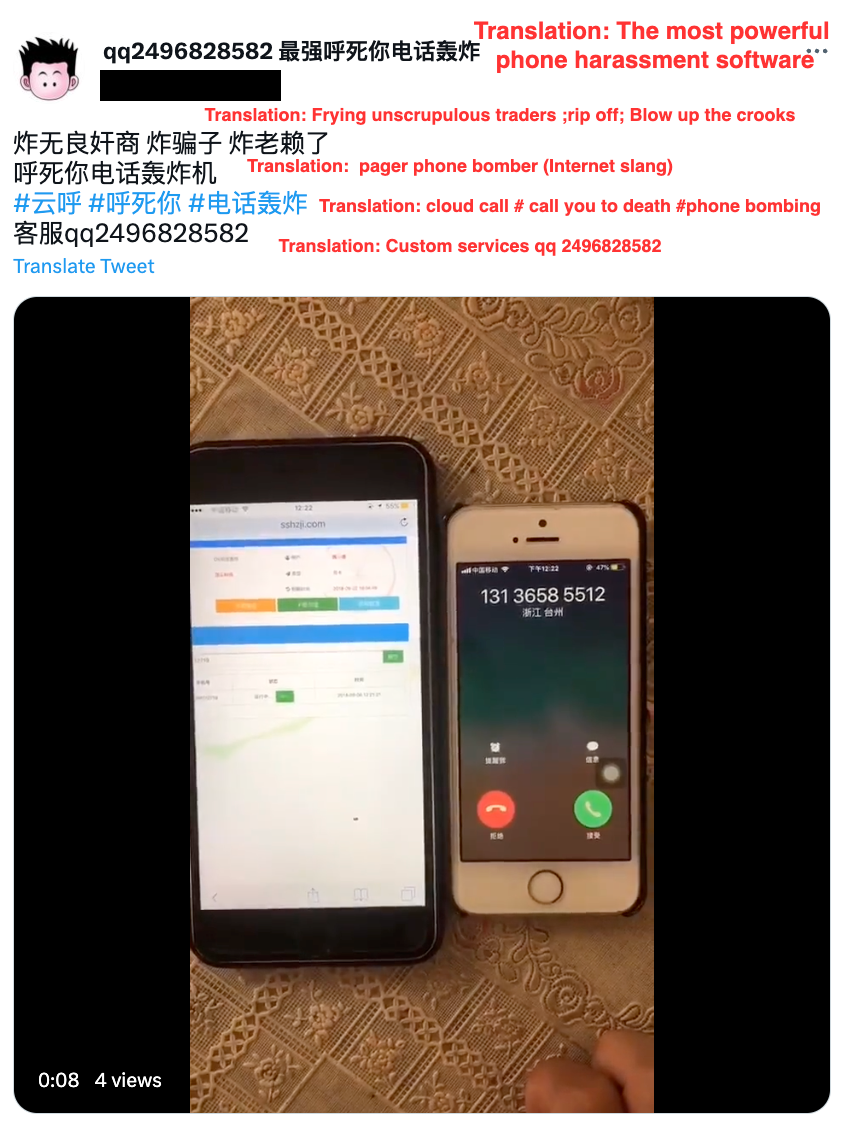

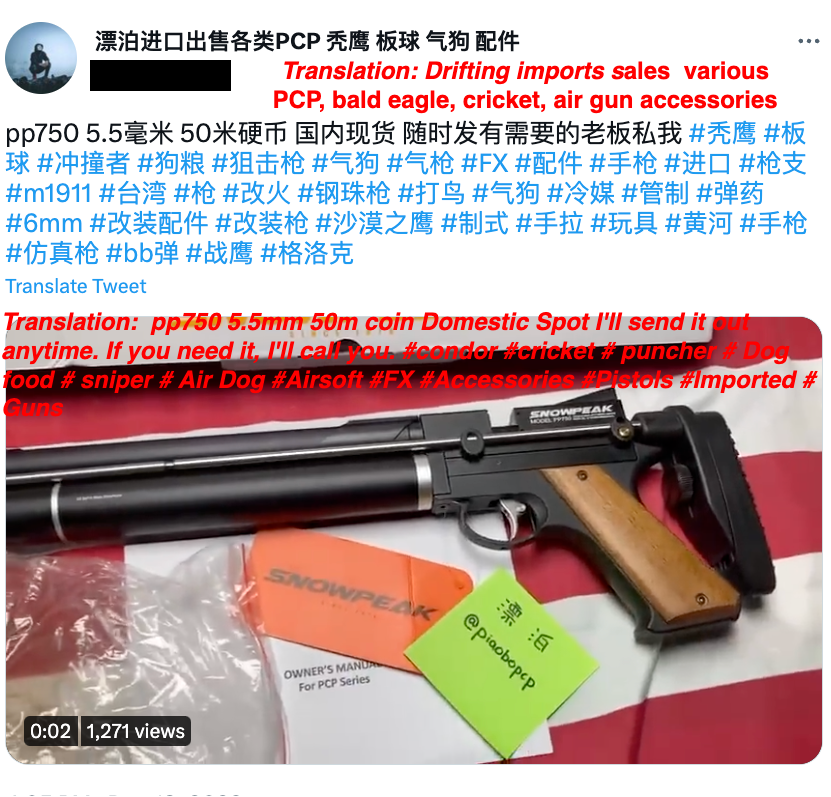

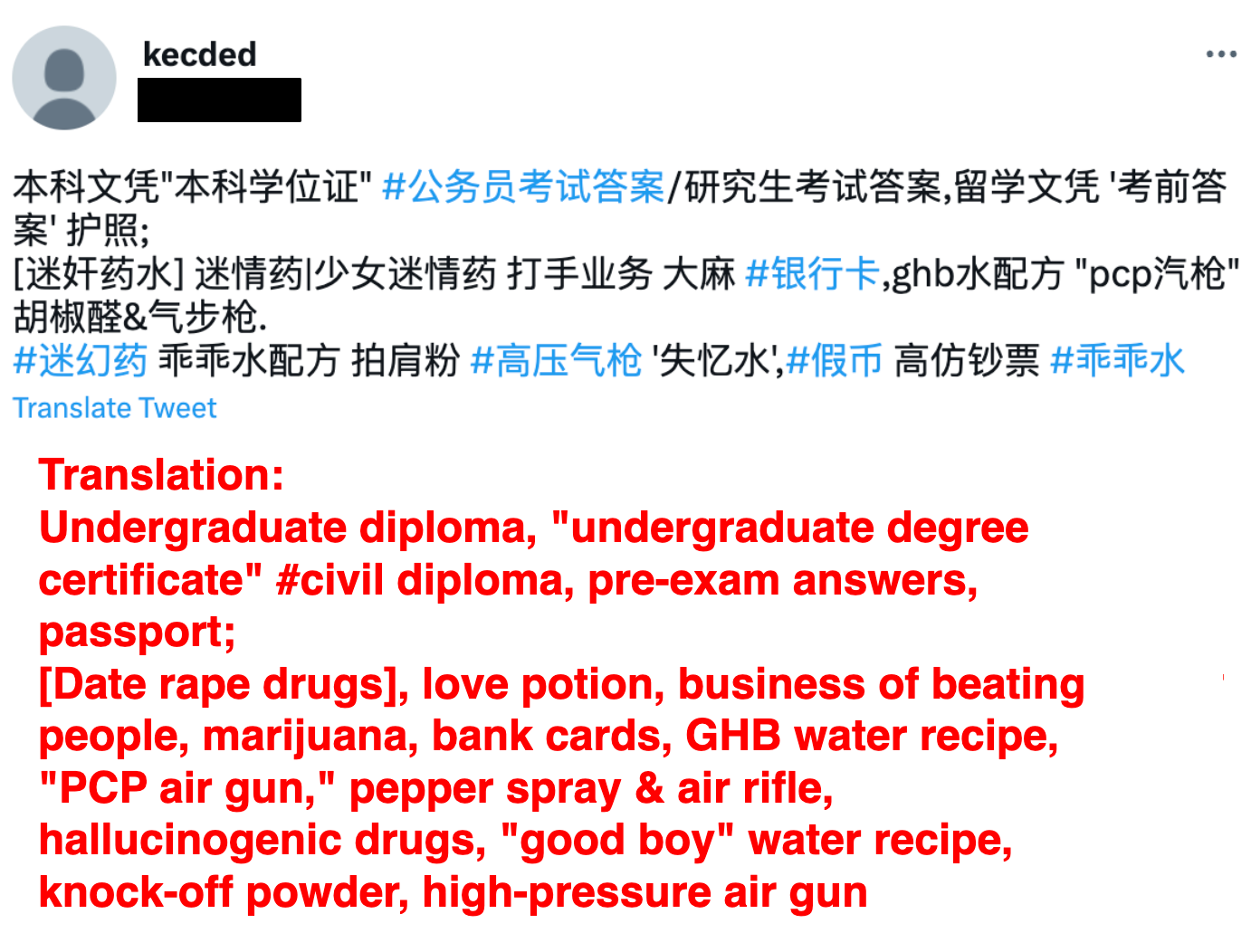

Here we show some PIP examples for each category.

A pornography PIP promoting child pornography in the attached image, instructing potential customers to visit the author's homepage for more resources.

An illegal drug PIP promoting methamphetamine in Thai.

A harassment PIP promoting phone bombing services.

A weapon PIP promoting various weapons including but not limited to pp750.

A data leakage PIP selling photo IDs.

A PIP promoting multiple illicit goods and services.

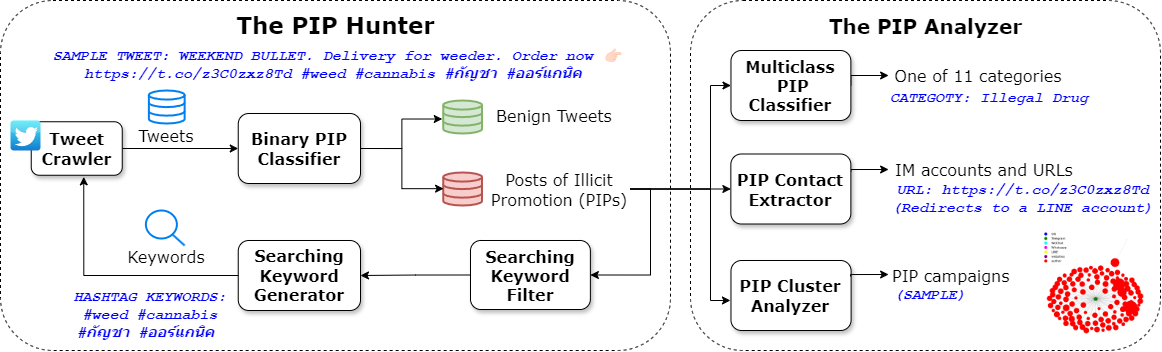

An overview of the methodology to capture and analyze posts of illicit promotion (PIPs) on Twitter.

As illustrated in the figure above, our methodology comprises two key modules. One is the PIP hunter, an automatic pipeline that captures illicit promotion tweets and collects the relevant Twitter accounts. The other is the PIP analyzer, which is designed to profile PIPs with regard to their categories of illicit goods and services, next-hop contacts, and the underlying campaigns.

The PIP hunter consists of a cycle of four steps. First, it searches Twitter with PIP-relevant keywords. Second, a binary PIP classifier takes each multilingual tweet text as input and decides whether it is a PIP. Third, given the PIPs identified, it evaluates the quality of the existing PIP keywords and excludes those with a low PIP hit rate. Finally, it generates keywords from the newly captured PIPs and appends them to the keyword set to boost the next round of PIP hunting.
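The four-step cycle can be sketched as a single function that is called once per round. This is a minimal illustration under stated assumptions, not the authors' implementation: `search` and `is_pip` are hypothetical callables standing in for the Twitter search and the binary PIP classifier, the `min_hit_rate` threshold is an invented parameter, and frequency-based token selection is a simple stand-in for whatever keyword generation the real pipeline uses.

```python
from collections import Counter
from typing import Callable, Iterable


def hunt_round(
    keywords: set[str],
    search: Callable[[str], Iterable[str]],  # hypothetical: keyword -> tweet texts
    is_pip: Callable[[str], bool],           # hypothetical: binary PIP classifier
    min_hit_rate: float = 0.5,               # assumed threshold, not from the paper
    new_keywords_per_round: int = 5,         # assumed budget, not from the paper
) -> tuple[set[str], set[str]]:
    """Run one round of the cycle; return (captured PIPs, updated keyword set)."""
    pips: set[str] = set()
    kept: set[str] = set()
    for kw in keywords:
        tweets = list(search(kw))                # step 1: keyword search
        hits = [t for t in tweets if is_pip(t)]  # step 2: binary classification
        pips.update(hits)
        hit_rate = len(hits) / len(tweets) if tweets else 0.0
        if hit_rate >= min_hit_rate:             # step 3: drop low-hit-rate keywords
            kept.add(kw)
    # Step 4: propose new keywords from tokens that are frequent in captured PIPs.
    # Tokens already tried as keywords (kept or dropped) are not proposed again.
    counts = Counter(tok for t in pips for tok in set(t.lower().split()))
    candidates = [tok for tok, _ in counts.most_common() if tok not in keywords]
    return pips, kept | set(candidates[:new_keywords_per_round])


# Toy usage with an in-memory corpus instead of a real Twitter search.
corpus = [
    "buy meth telegram",
    "meth cheap telegram",
    "weather is nice",
    "nice telegram channel for news",
]
toy_search = lambda kw: [t for t in corpus if kw in t.split()]
toy_is_pip = lambda t: "meth" in t

found, next_keywords = hunt_round(
    {"meth", "nice"}, toy_search, toy_is_pip, new_keywords_per_round=1
)
```

In the toy run, the keyword `nice` matches only benign tweets and is dropped, while `telegram` is added because it appears in every captured PIP. Feeding `next_keywords` back into `hunt_round` gives the next round of the cycle.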

The PIP analyzer includes a multi-class classifier to reveal what kinds of illicit goods and services have been promoted in PIPs, a PIP contact extractor to retrieve from PIPs the embedded next hops to communicate with illicit promotion operators, and a PIP cluster to group PIPs into clusters and thus help reveal the campaigns underpinning PIPs.
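One simple way to see how extracted contacts can help surface campaigns is to group PIPs that share at least one next-hop contact. The sketch below does this with a union-find structure. It is only an illustration under assumptions: the text above does not specify the clustering algorithm, so this is not the authors' method, and the function name, PIP identifiers, and contact strings are all hypothetical.

```python
from collections import defaultdict


def cluster_by_shared_contact(pip_contacts: dict[str, set[str]]) -> list[set[str]]:
    """Group PIP ids into clusters where PIPs are linked by shared contacts."""
    parent = {pip: pip for pip in pip_contacts}

    def find(x: str) -> str:
        # Follow parent pointers to the cluster root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    first_seen: dict[str, str] = {}  # contact -> first PIP that embedded it
    for pip, contacts in pip_contacts.items():
        for contact in contacts:
            if contact in first_seen:
                # Same contact seen before: merge the two PIPs' clusters.
                parent[find(pip)] = find(first_seen[contact])
            else:
                first_seen[contact] = pip

    groups: dict[str, set[str]] = defaultdict(set)
    for pip in pip_contacts:
        groups[find(pip)].add(pip)
    return list(groups.values())


# Hypothetical PIP ids mapped to the contacts extracted from them.
example = {
    "p1": {"telegram:a"},
    "p2": {"telegram:a", "whatsapp:b"},
    "p3": {"whatsapp:b"},
    "p4": {"telegram:z"},
    "p5": set(),
}
clusters = cluster_by_shared_contact(example)
```

Here `p1`, `p2`, and `p3` end up in one cluster because they are chained through two shared contacts, while `p4` and `p5` stay on their own. PIPs with no extracted contact form singleton clusters under this scheme, which is one reason a real system would likely combine contacts with other signals.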

To train and evaluate the PIP classifiers mentioned above, a ground truth dataset is collected through an iterative labeling process. Specifically, two labelers independently annotate samples and periodically resolve conflicts. Each iteration involves 1) searching Twitter with PIP-relevant keywords, which yields crawled tweets; 2) labeling a sampled subset of the crawled tweets to update the ground truth; 3) training a weak PIP classifier on the updated, still provisional ground truth; 4) applying the weak PIP classifier to the unlabeled crawled tweets and identifying both false positive and false negative predictions; and 5) updating the ground truth accordingly. When a sample is labeled, it is assigned not only the binary PIP class but also one of the PIP categories. This iterative process continues until no new PIP categories emerge, each PIP category is well represented in the ground truth, and the PIP classifier performs well when evaluated on the crawled tweets. Furthermore, an inter-rater agreement rate of over 90% is achieved across all labeling tasks. In particular, for 1,000 samples from the ground truth, the agreement rate is 99.8%.
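As a worked example of the agreement figure, the sketch below computes a simple percent agreement between two labelers. It assumes that "agreement rate" means the fraction of samples on which both labelers chose the same label, which the text does not state explicitly. The label lists are synthetic and only reproduce the arithmetic: under this definition, 99.8% on 1,000 samples corresponds to 998 matching labels.

```python
def agreement_rate(labels_a: list[str], labels_b: list[str]) -> float:
    """Fraction of samples on which two labelers assigned the same label."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both labelers must annotate the same samples")
    if not labels_a:
        raise ValueError("at least one sample is required")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return matches / len(labels_a)


# Synthetic labels: 1,000 samples with exactly 2 disagreements.
labeler_1 = ["pip"] * 1000
labeler_2 = list(labeler_1)
labeler_2[0] = "non-pip"
labeler_2[1] = "non-pip"

rate = agreement_rate(labeler_1, labeler_2)
print(f"{rate:.1%}")
```

Percent agreement does not correct for agreement expected by chance, so a chance-corrected statistic such as Cohen's kappa is a common complement when class frequencies are skewed.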

To facilitate reproduction of our machine learning models (e.g., the binary PIP classifier and the NER-based contact extractor), the source code for training and testing, along with the respective ground truth datasets, is available at this link. To avoid misuse by miscreants, the whole dataset of PIPs and their contacts will be provided upon request and after a background check. Please contact the authors to request access.

@misc{wang2024illicit,
  title={Illicit Promotion on Twitter},
  author={Hongyu Wang and Ying Li and Ronghong Huang and Xianghang Mi},
  year={2024},
  eprint={2404.07797},
  archivePrefix={arXiv},
  primaryClass={cs.CR}
}